Automation and AI Technology in Regulatory

By John Cogan

I am delighted to be writing this guest blog for Gens & Associates. Steve and I go back a long way, and we’ve always had excellent exchanges of ideas and the occasional collaboration.

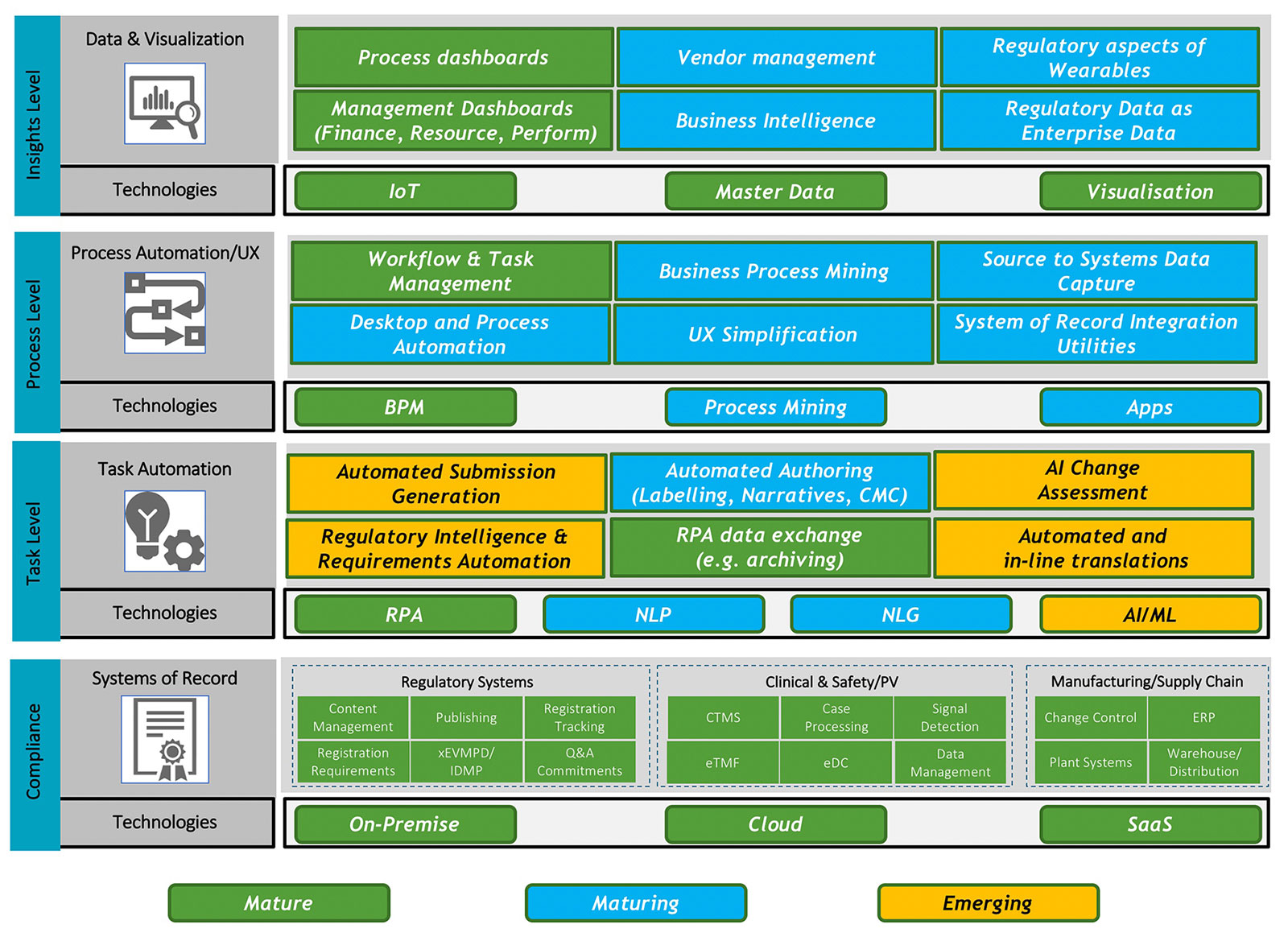

I chose the topic of Automation and AI Technology in Regulatory because it is an area I have been active in, and interested in, for almost a decade. And the truth of the matter is that, in that time, not a great deal has happened beyond some proofs of concept and a few isolated production solutions. Let’s start by examining both the technologies in play and some of their use cases. The picture above is a very rich one: I’ve tried to capture the technologies and example use cases in a single picture, grouping them by the type of automation being sought and by the maturity of both the technology and the use case. This is my personal construct; I’m sure there are many other ways to tackle this.

As the Gens & Associates Research Team has pointed out in the latest World Class RIM Survey, we are nearing the end of the latest RIM (Regulatory Information Management) Transformation Wave. There is no doubt that, over the last decade, this investment wave has consumed a significant share of the total dollars available to invest in Regulatory technology, with another significant portion spent on the ever-evolving IDMP (Identification of Medicinal Products) requirements, which have seen several false dawns.

But it would be lazy simply to assume this is the reason behind the lack of progress in automation and AI in Regulatory. The RIM transformation wave promised some of this automation, but in reality it has mostly succeeded only in moving the industry from bespoke, on-premise solutions to more standardised cloud and SaaS solutions. This is a significant achievement across the industry, but it is becoming clear that Life Sciences organisations and their strategic partners will need to tackle the automation use cases themselves, rather than relying on System of Record vendors to evolve their products in that direction.

You will notice I referred to both Life Sciences companies and their strategic partners in the previous paragraph. Looking at the automation use cases in the preceding diagram, I suggest that for many of them, Life Sciences companies today rely on their strategic partners to deliver the underlying work. An old rule of automation is to tackle “high volume, low complexity” tasks for maximum benefit. But isn’t this exactly the same work (e.g., lifecycle management) that Life Sciences companies have outsourced to strategic partners, or have built large-scale, in-house offshore “captive” solutions to manage? The question then becomes: who invests in this automation, versus who wants to gain the benefit?

Most (all?) regulatory outsourcing deals are keenly competitive on price, with vendors usually committing to a reducing cost per service over the life of the contract. This is justified by the efficiency gains expected as the vendor comes to understand the work better, although in practice the reduction is often achieved simply by using cheaper, less experienced resources to do the work. In this world of tight margins, where is the money for investment in innovation? And if the vendor must invest but the Sponsor wants to gain the benefit, where is the incentive? Sponsors will argue that strategic partners should invest because these tools and technologies can be re-used across multiple customers, but vendors know that tools are not fully transferable from one client to another, and “build it and they will come” is a difficult sell to senior management who are watching monthly and quarterly revenue and profits.

I would argue that for simpler, more mature technologies such as Robotic Process Automation (RPA) and Business Process Management (BPM), vendors should invest in these tools, as the payback is significant. I have always thought “process” is a misnomer in RPA, because RPA is generally applied to “task” automation, while BPM is applied to end-to-end process automation. But as we move up the complexity curve into natural language processing (NLP) and natural language generation (NLG), and eventually into Machine Learning (ML) and true AI, the critical success factor is access to large amounts of relevant data with which to train these tools and algorithms. And it is the Sponsors who have this data in abundance.

Here is a real example. Using NLG to automate narrative writing in safety reporting is a strong use case. Clinical studies can require thousands of narratives to be written, all following a basic pattern, yet one where boilerplate, RPA-style approaches just don’t work. For this very specific example, a vendor needs access to thousands of historical, human-written narratives to train the algorithms. Will Sponsors be prepared to give up these data? Hopefully yes, in return for a share of the reduced cost of narrative writing. But if your narrative writing is already largely offshored to highly skilled but cost-effective medical writers, does the business case stack up?

Another use case making a comeback is using technology to improve regulatory intelligence and regulatory requirements management. Most efforts to date have focused on requirements databases, often maintained through a network of local and regional regulatory experts (either in-house or outsourced). Subscription database providers generally supply the standard regulatory authority requirements, while Sponsors want to be able to add their own local nuances and product-specific learnings. So, what is preventing these two needs from coming together as one? Can subscription providers also supply that local requirements intelligence, or is it really so specific to each Sponsor’s products and therapeutic settings? At the intelligence end of the spectrum, newer technology organisations are focusing on “precedent analysis”, an exciting development that analyses the historical decisions made by major regulatory authorities to inform regulatory strategy for future submissions. It will be interesting to see how the business models for, and adoption of, these technologies take shape.

There are several industry groups, including DIA, IRISS, and HeRO Forum, where Regulatory automation and AI use cases are discussed and demonstrated. But I believe that unless we delve much further into the economics of automation, and the balance between investment and reward, the benefits of automation in Regulatory will not be delivered. Or perhaps economically viable use cases don’t actually exist in Regulatory, and the dollars are better spent in Clinical and Safety?

John Cogan is the founder of JPC Advisory Limited (www.jpcadvisory.co.uk) and an industry veteran with over 30 years of experience in regulatory processes and technology, on both the Sponsor side and the strategic vendor side. We’d love to hear your thoughts on the topics raised in this blog: send us your questions and comments, or contact the author directly (john@cogan-mail.com).